Advances in artificial intelligence (AI), particularly large language models (LLMs), have transformed industries, powering everything from chatbots and virtual assistants to automated content generation and personalized marketing. However, as LLMs become more complex and more deeply integrated into business operations, managing their lifecycle, deployment, and performance has become a challenge. That is where LLMOps comes in.

LLMOps, short for Large Language Model Operations, is a specialized framework that builds on traditional MLOps (Machine Learning Operations) but focuses specifically on the intricacies of LLMs. Just as MLOps streamlined the development and deployment of machine learning models, LLMOps helps manage the operational complexities of training, fine-tuning, and scaling large language models.

In this guide, we’ll take a deep dive into Large Language Model Operations, exploring its principles, key tools, challenges, and future trajectory. Whether you’re an AI developer, a business leader, or someone new to the AI field, this article will help you understand LLMOps and its importance in modern AI operations.

What Is LLMOps?

LLMOps, or Large Language Model Operations, refers to the set of practices, tools, and processes used to manage the lifecycle of large language models (LLMs). LLMs such as GPT-3, BERT, and other state-of-the-art models are trained on vast amounts of data to perform complex tasks like text generation, translation, summarization, question answering, and more. Because of their scale, LLMs present unique challenges in deployment, monitoring, scaling, and ongoing management, and these are the challenges LLMOps addresses.

In essence, Large Language Model Operations is a specialized branch of MLOps that focuses on the operational management of large language models. It is designed to optimize the process of integrating, deploying, and maintaining LLMs in real-world applications, so that they perform at their best while meeting business needs and complying with ethical standards.

Key Components of LLMOps:

- Model Training: The process of training large language models, which involves collecting and preparing massive datasets, using distributed computing resources, and applying advanced machine learning algorithms.

- Model Deployment: Deploying the trained model in a production environment, often through APIs or cloud-based platforms, so that users or applications can interact with it.

- Model Monitoring: Tracking the model’s performance over time to make sure it continues to produce accurate, reliable, and unbiased results.

- Model Maintenance: Continuously updating and fine-tuning models to keep them relevant, accurate, and effective.

- Version Control and Governance: Managing the different versions of each model, including tracking changes and maintaining compliance with legal and ethical standards.

Large Language Model Operations is a crucial part of AI development for companies that rely heavily on LLMs for use cases ranging from automated customer service to complex data analysis.

LLMOps vs. MLOps: What’s the Difference?

While MLOps and LLMOps share some common practices and tools, their primary difference lies in the complexity and scale of the models they handle.

MLOps:

- Focuses on machine learning models of all sizes, from simple regression models to deep learning models.

- Automates the training, deployment, monitoring, and scaling of machine learning models across many domains (e.g., healthcare, finance).

- MLOps platforms can be used for a wide variety of models, including image classification, recommendation systems, and speech recognition.

LLMOps:

- Designed specifically for the unique needs of large language models with billions of parameters and massive data requirements.

- Handles challenges like distributed training, cost-efficient scaling, data bias monitoring, and real-time updates.

- More focused on natural language processing (NLP) tasks, such as text generation, machine translation, question answering, and summarization.

While MLOps is widely used across industries, Large Language Model Operations has emerged as a specialized extension aimed at the unique challenges of LLM training and deployment.

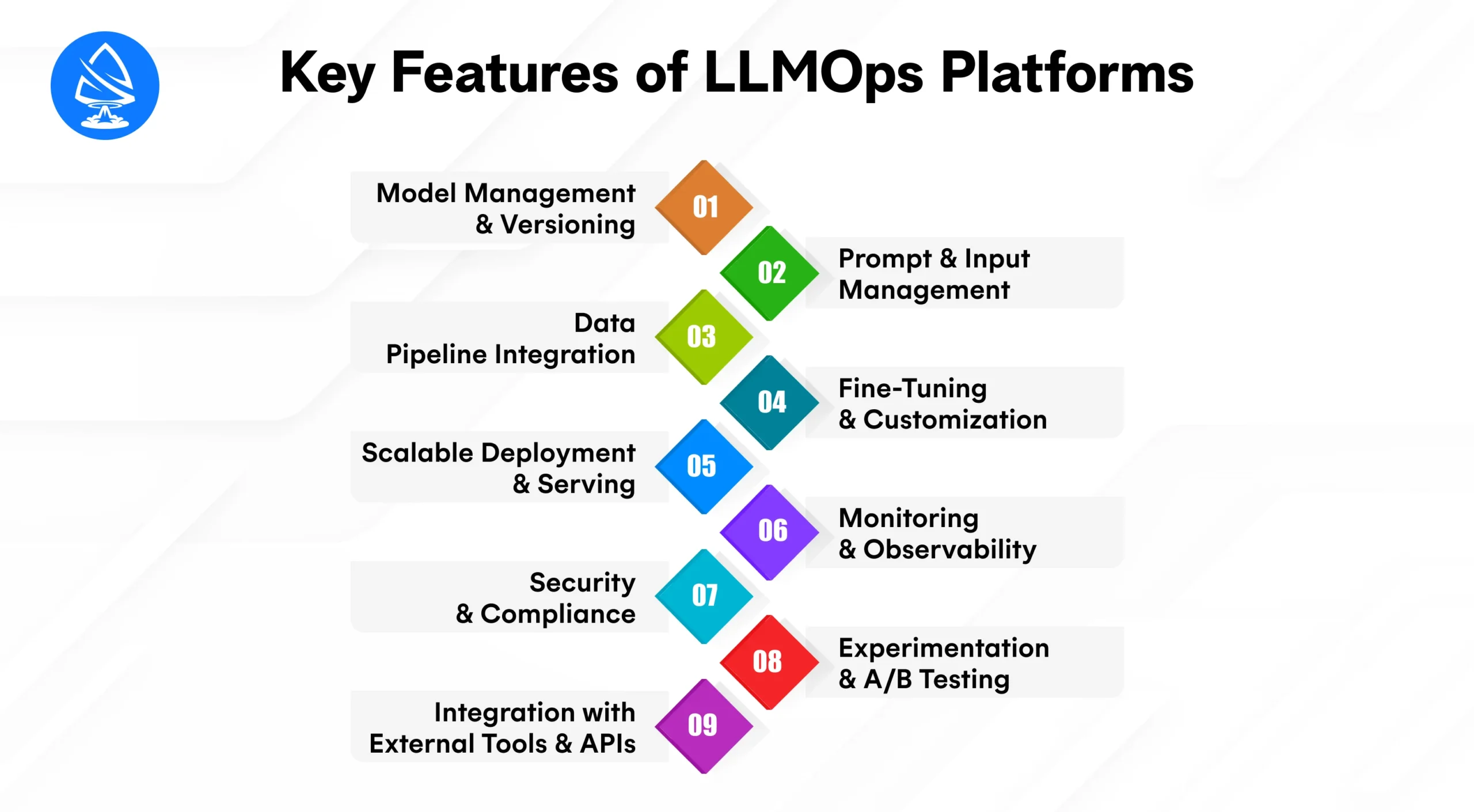

Key Features of LLMOps Platforms

LLMOps platforms are specialized systems designed to manage the entire lifecycle of Large Language Models (LLMs), from development to deployment and monitoring. These platforms enable organizations to scale LLMs efficiently, ensure reliability, and maintain ethical AI practices. Unlike general MLOps platforms, LLMOps platforms address challenges specific to LLMs, such as their massive size, complex inference requirements, and sensitivity to prompt variations. Let’s look at the key features that make these platforms essential for modern AI operations.

1. Model Management and Versioning

Managing LLMs involves tracking multiple versions of models, including pre-trained, fine-tuned, and domain-specific models. Large Language Model Operations platforms provide:

- Version control for models, similar to Git for code.

- Seamless rollback to earlier model versions in case of performance issues.

- Model registry systems to store, categorize, and retrieve models easily.

Example: If a company fine-tunes GPT-4 for healthcare queries, an LLMOps platform makes sure the original model, the fine-tuned version, and any experimental models are all versioned and available for comparison.
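The following minimal in-memory Python sketch makes the registry and rollback ideas concrete. It is an illustration under stated assumptions, not how any particular platform implements them. The class and method names (`ModelRegistry`, `register`, `promote`, `rollback`) and the artifact URIs are hypothetical. A production registry, such as MLflow's Model Registry, would persist this state and store the weights externally.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    """One registered model artifact plus metadata used for comparison."""
    version: int
    artifact_uri: str  # hypothetical location of the stored weights
    base_model: str    # the pre-trained model this version derives from
    tags: dict = field(default_factory=dict)


class ModelRegistry:
    """Minimal in-memory registry: register, promote, and roll back versions."""

    def __init__(self) -> None:
        self._versions: dict[str, list[ModelVersion]] = {}
        self._history: dict[str, list[int]] = {}  # production promotion history per model

    def register(self, name: str, artifact_uri: str, base_model: str, **tags) -> ModelVersion:
        versions = self._versions.setdefault(name, [])
        mv = ModelVersion(len(versions) + 1, artifact_uri, base_model, dict(tags))
        versions.append(mv)
        return mv

    def promote(self, name: str, version: int) -> None:
        if not any(v.version == version for v in self._versions.get(name, [])):
            raise KeyError(f"{name} v{version} is not registered")
        self._history.setdefault(name, []).append(version)

    def production(self, name: str) -> ModelVersion:
        # Versions are numbered from 1 in registration order, so index = version - 1.
        return self._versions[name][self._history[name][-1] - 1]

    def rollback(self, name: str) -> ModelVersion:
        history = self._history[name]
        if len(history) < 2:
            raise RuntimeError("no earlier production version to roll back to")
        history.pop()  # drop the current version; the previous one becomes live again
        return self.production(name)


registry = ModelRegistry()
registry.register("support-bot", "s3://models/base", base_model="base-llm")
registry.register("support-bot", "s3://models/ft-health", base_model="base-llm", domain="healthcare")
registry.promote("support-bot", 1)
registry.promote("support-bot", 2)
print(registry.production("support-bot").version)  # 2
print(registry.rollback("support-bot").version)    # 1
```

In this sketch, rollback is a one-step operation because the registry keeps a promotion history rather than a single "current" pointer.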

2. Prompt and Input Management

LLMs are highly sensitive to prompts, and slight variations can produce drastically different outputs. LLMOps platforms include:

- Prompt versioning and testing to track effectiveness across different inputs.

- Templates and reusable prompts for common tasks.

- Prompt performance analytics to optimize responses over time.

Example: An AI-powered chatbot can use different prompts for customer support, FAQs, or troubleshooting. LLMOps platforms let teams manage these prompts systematically and track which versions produce the best results.
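Here is a minimal sketch of prompt versioning with simple performance tracking. It assumes prompts are stored as `string.Template` texts, and that each response is later labeled a success or failure (for example, from user ratings). `PromptRegistry` and its methods are hypothetical names for illustration. Real prompt-management tools add persistence, diffing, and richer metrics.

```python
from string import Template


class PromptRegistry:
    """Versioned prompt templates with simple success-rate tracking per version."""

    def __init__(self) -> None:
        self._templates: dict[str, list[Template]] = {}
        self._stats: dict[tuple[str, int], list[int]] = {}  # (name, version) -> [successes, trials]

    def add(self, name: str, text: str) -> int:
        versions = self._templates.setdefault(name, [])
        versions.append(Template(text))
        return len(versions)  # 1-based version number

    def render(self, name: str, version: int, **values: str) -> str:
        # substitute() raises KeyError for a missing placeholder, catching broken prompts early.
        return self._templates[name][version - 1].substitute(**values)

    def record(self, name: str, version: int, success: bool) -> None:
        stats = self._stats.setdefault((name, version), [0, 0])
        stats[0] += int(success)
        stats[1] += 1

    def success_rate(self, name: str, version: int) -> float:
        successes, trials = self._stats.get((name, version), [0, 0])
        return successes / trials if trials else 0.0

    def best_version(self, name: str) -> int:
        count = len(self._templates[name])
        return max(range(1, count + 1), key=lambda v: self.success_rate(name, v))


prompts = PromptRegistry()
v1 = prompts.add("support", "Answer the customer question: $question")
v2 = prompts.add("support", "You are a support agent. Answer concisely: $question")
print(prompts.render("support", v2, question="How do I reset my password?"))
for ok in (True, False, False):
    prompts.record("support", v1, ok)
for ok in (True, True, False):
    prompts.record("support", v2, ok)
print(prompts.best_version("support"))  # 2
```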

3. Data Pipeline Integration

LLMs require large volumes of high-quality data for training and fine-tuning. LLMOps platforms facilitate:

- Automated data ingestion from multiple sources.

- Data validation and cleaning pipelines to ensure quality.

- Support for structured and unstructured data, including text, JSON, or documents.

Example: A legal AI tool might feed contracts, case histories, and regulations into the LLM pipeline. LLMOps platforms make sure the data is preprocessed, validated, and ready for fine-tuning without manual intervention.
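The validation and cleaning steps can be sketched as a small chain of functions. This is a simplified illustration that assumes records arrive as JSON lines with a `text` field, which is a hypothetical schema. It applies three common steps: Unicode normalization with whitespace cleanup, a minimum-length check, and exact deduplication. The 20-character threshold is arbitrary.

```python
import json
import re
import unicodedata


def clean_text(text: str) -> str:
    """Normalize Unicode forms and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def is_valid(record: dict, min_chars: int = 20) -> bool:
    """Accept only records with a non-trivial 'text' field (assumed schema)."""
    text = record.get("text")
    return isinstance(text, str) and len(text) >= min_chars


def run_pipeline(raw_lines: list[str]) -> list[dict]:
    """Parse JSON lines, clean, validate, and drop exact duplicates."""
    seen: set[str] = set()
    output: list[dict] = []
    for line in raw_lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip malformed input rather than failing the whole batch
        if not isinstance(record, dict):
            continue
        if isinstance(record.get("text"), str):
            record["text"] = clean_text(record["text"])
        if not is_valid(record) or record["text"] in seen:
            continue
        seen.add(record["text"])
        output.append(record)
    return output


raw = [
    '{"source": "contract", "text": "This  Agreement   is entered into by the parties."}',
    '{"source": "contract", "text": "This Agreement is entered into by the parties."}',
    '{"source": "statute", "text": "too short"}',
    "not json",
]
print(len(run_pipeline(raw)))  # 1: a duplicate, a too-short record, and a malformed line are dropped
```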

4. Fine-Tuning and Customization

Fine-tuning LLMs for specific tasks is resource-intensive. LLMOps platforms provide:

- Managed fine-tuning pipelines with monitoring of resource usage.

- Hyperparameter optimization to maximize model performance.

- Task-specific customization without compromising the base model.

Example: A company building a medical diagnosis assistant can fine-tune a general LLM on medical literature to improve the accuracy and relevance of its recommendations.
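Hyperparameter optimization, in its simplest form, is a grid search: try each combination of settings and keep the best-scoring one. The sketch below assumes a caller-supplied `objective` function. In a real pipeline, that function would run a fine-tuning job and return a validation score. `toy_objective` is a made-up stand-in so the example runs instantly, and the search-space values are illustrative only.

```python
from itertools import product
from typing import Callable


def grid_search(space: dict[str, list], objective: Callable[[dict], float]) -> tuple[dict, float]:
    """Evaluate every combination in `space` and return the highest-scoring one."""
    best_config, best_score = None, float("-inf")
    names = list(space)
    for values in product(*(space[n] for n in names)):
        config = dict(zip(names, values))
        score = objective(config)  # in practice: fine-tune, then score on a validation set
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_objective(config: dict) -> float:
    """Placeholder for a real fine-tune-and-evaluate run; peaks at lr=2e-5, 3 epochs."""
    return -abs(config["learning_rate"] - 2e-5) * 1e4 - abs(config["epochs"] - 3)


space = {"learning_rate": [1e-5, 2e-5, 5e-5], "epochs": [1, 3, 5]}
best, score = grid_search(space, toy_objective)
print(best)  # {'learning_rate': 2e-05, 'epochs': 3}
```

The number of grid points grows multiplicatively with each added hyperparameter. For expensive fine-tuning runs, teams often switch to random search or Bayesian optimization behind the same objective interface.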

5. Scalable Deployment and Serving

LLMs are computationally heavy and require optimized serving infrastructure. Key features include:

- Cloud-native deployment for horizontal scaling.

- Low-latency inference optimizations using caching, batching, or model compression.

- Multi-region deployment for global accessibility.

Example: A customer support AI must respond in real time across the globe. LLMOps platforms keep the model running efficiently, minimizing latency and maximizing uptime.
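Caching and batching, two of the latency optimizations listed above, can be combined in a few dozen lines. In this sketch, `generate_batch` is a placeholder for whatever model call the serving stack exposes. It is assumed to take a list of prompts and return one response per prompt, in order. Repeated prompts are answered from an LRU cache, and cache misses are sent to the model in fixed-size batches.

```python
from collections import OrderedDict
from typing import Callable


class CachedBatchingServer:
    """Serves prompts through an LRU response cache and fixed-size model batches."""

    def __init__(self, generate_batch: Callable[[list[str]], list[str]],
                 max_batch_size: int = 8, cache_size: int = 1024) -> None:
        self.generate_batch = generate_batch  # stand-in for the real model call
        self.max_batch_size = max_batch_size
        self.cache_size = cache_size
        self._cache: OrderedDict[str, str] = OrderedDict()
        self.model_calls = 0

    def _cache_put(self, prompt: str, response: str) -> None:
        self._cache[prompt] = response
        self._cache.move_to_end(prompt)
        if len(self._cache) > self.cache_size:
            self._cache.popitem(last=False)  # evict the least recently used entry

    def serve(self, prompts: list[str]) -> list[str]:
        results: dict[str, str] = {}
        misses: list[str] = []
        for p in prompts:
            if p in self._cache:
                self._cache.move_to_end(p)  # mark as recently used
                results[p] = self._cache[p]
            elif p not in misses:
                misses.append(p)
        # Send cache misses to the model in batches instead of one call per prompt.
        for start in range(0, len(misses), self.max_batch_size):
            batch = misses[start:start + self.max_batch_size]
            self.model_calls += 1
            for prompt, response in zip(batch, self.generate_batch(batch)):
                self._cache_put(prompt, response)
                results[prompt] = response
        return [results[p] for p in prompts]


def fake_model(batch: list[str]) -> list[str]:
    return [p.upper() for p in batch]  # placeholder "model" for the demo


server = CachedBatchingServer(fake_model, max_batch_size=2)
print(server.serve(["hi", "hello", "hey", "hi"]))  # ['HI', 'HELLO', 'HEY', 'HI']
print(server.model_calls)  # 2: three unique prompts in batches of two
```

Exact-match caching only helps when identical prompts recur, such as common FAQ questions. Production servers also typically batch dynamically across concurrent requests, not just within a single call as shown here.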

6. Monitoring and Observability

Monitoring LLMs goes beyond traditional metrics like accuracy. It includes:

- Output quality tracking, including relevance, completeness, and bias detection.

- Resource usage monitoring, such as GPU/TPU utilization and memory consumption.

- Real-time logging and alerts to detect anomalies or performance degradation.

Example: If an LLM starts producing biased or unsafe responses, the platform can trigger alerts so developers can intervene immediately.
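A rolling-window monitor is one simple way to turn per-request signals into alerts like those described above. The sketch assumes each request reports its latency and a boolean `flagged` value. That flag would come from a separate safety or bias check, which is not shown and is hypothetical here. The thresholds are illustrative defaults, not recommendations.

```python
from collections import deque
from statistics import mean


class RollingMonitor:
    """Keeps the last `window` requests and reports threshold breaches."""

    def __init__(self, window: int = 100, max_latency_ms: float = 2000.0,
                 max_flag_rate: float = 0.05) -> None:
        self.latencies: deque[float] = deque(maxlen=window)
        self.flags: deque[int] = deque(maxlen=window)  # 1 if the external check flagged the output
        self.max_latency_ms = max_latency_ms
        self.max_flag_rate = max_flag_rate

    def record(self, latency_ms: float, flagged: bool) -> list[str]:
        """Add one observation and return any alerts for the current window."""
        self.latencies.append(latency_ms)
        self.flags.append(int(flagged))
        alerts = []
        avg_latency = mean(self.latencies)
        flag_rate = mean(self.flags)
        if avg_latency > self.max_latency_ms:
            alerts.append(f"avg latency {avg_latency:.0f} ms exceeds {self.max_latency_ms:.0f} ms")
        if flag_rate > self.max_flag_rate:
            alerts.append(f"flagged-output rate {flag_rate:.1%} exceeds {self.max_flag_rate:.1%}")
        return alerts


monitor = RollingMonitor(window=10, max_latency_ms=500, max_flag_rate=0.2)
for _ in range(8):
    monitor.record(latency_ms=300, flagged=False)
for _ in range(3):
    alerts = monitor.record(latency_ms=300, flagged=True)
print(alerts)  # ['flagged-output rate 30.0% exceeds 20.0%']
```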

7. Security and Compliance

LLMs often handle sensitive data, which makes security critical. LLMOps platforms provide:

- Role-based access control (RBAC) to secure model access.

- Data encryption in transit and at rest.

- Audit trails and compliance reporting to meet regulatory standards like HIPAA or GDPR.

Example: A healthcare LLM must keep patient data fully protected while maintaining a record of who accessed the model or datasets, for accountability.
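Role-based access control and audit trails fit naturally together because every authorization decision can be logged. The sketch below is a minimal illustration with a hypothetical role-to-permission table and hypothetical permission names such as `dataset:export`. It does not cover encryption, which is normally handled by the storage and transport layers.

```python
from datetime import datetime, timezone

# Hypothetical role -> permission mapping; a real deployment would load this from policy config.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"model:query"},
    "ml_engineer": {"model:query", "model:deploy", "dataset:read"},
    "admin": {"model:query", "model:deploy", "dataset:read", "dataset:export"},
}


class AccessController:
    """Checks permissions by role and appends every decision to an audit log."""

    def __init__(self, user_roles: dict[str, str]) -> None:
        self.user_roles = user_roles
        self.audit_log: list[dict] = []

    def authorize(self, user: str, action: str, resource: str) -> bool:
        role = self.user_roles.get(user)  # None for unknown users, who get no permissions
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        # Log denials as well as grants so the trail also shows attempted access.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "resource": resource,
            "allowed": allowed,
        })
        return allowed


acl = AccessController({"alice": "ml_engineer", "bob": "viewer"})
print(acl.authorize("alice", "dataset:read", "patient-records"))  # True
print(acl.authorize("bob", "dataset:export", "patient-records"))  # False
print(len(acl.audit_log))                                          # 2
```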

8. Experimentation and A/B Testing

LLMOps platforms facilitate continuous improvement by supporting:

- Parallel model experimentation to test multiple configurations.

- A/B testing of prompts or fine-tuned models to identify the best-performing option.

- Feedback loops from user interactions to improve model outputs dynamically.

Example: A virtual tutor LLM can test different teaching styles or explanations. LLMOps platforms collect user feedback and determine which approach improves learning outcomes.
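For A/B tests, users are commonly assigned to variants by hashing a stable identifier, so the same user always sees the same prompt or model. The sketch below uses SHA-256 for assignment and tracks a binary "helpful" rating per variant. The class and experiment names are hypothetical. A real experiment would also check statistical significance before declaring a winner.

```python
import hashlib
from collections import defaultdict


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a user to a variant so repeat visits see the same one."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return variants[int(digest, 16) % len(variants)]


class ABTest:
    """Tracks a binary 'helpful' rating for each variant of one experiment."""

    def __init__(self, name: str, variants: list[str]) -> None:
        self.name = name
        self.variants = variants
        self.ratings: defaultdict[str, list[int]] = defaultdict(list)

    def variant_for(self, user_id: str) -> str:
        return assign_variant(user_id, self.name, self.variants)

    def record(self, user_id: str, helpful: bool) -> None:
        self.ratings[self.variant_for(user_id)].append(int(helpful))

    def summary(self) -> dict[str, float]:
        """Helpful-rate per variant that has received at least one rating."""
        return {v: sum(r) / len(r) for v, r in self.ratings.items() if r}


ab = ABTest("tutor-explanations", ["step_by_step", "analogy"])
print(ab.variant_for("student-42") == ab.variant_for("student-42"))  # True: assignment is sticky
for user, helpful in [("s1", True), ("s2", False), ("s3", True), ("s4", True)]:
    ab.record(user, helpful)
print(ab.summary())
```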

9. Integration with External Tools and APIs

Modern LLMOps platforms are designed to integrate seamlessly with:

- Enterprise applications like CRM, ERP, or customer support systems.

- Analytics platforms to track performance metrics.

- Automation tools for prompt generation, model updates, or workflow orchestration.

Example: An AI writing assistant can integrate with Google Docs or Microsoft Word through APIs, accessing the LLM via an LLMOps-managed pipeline.

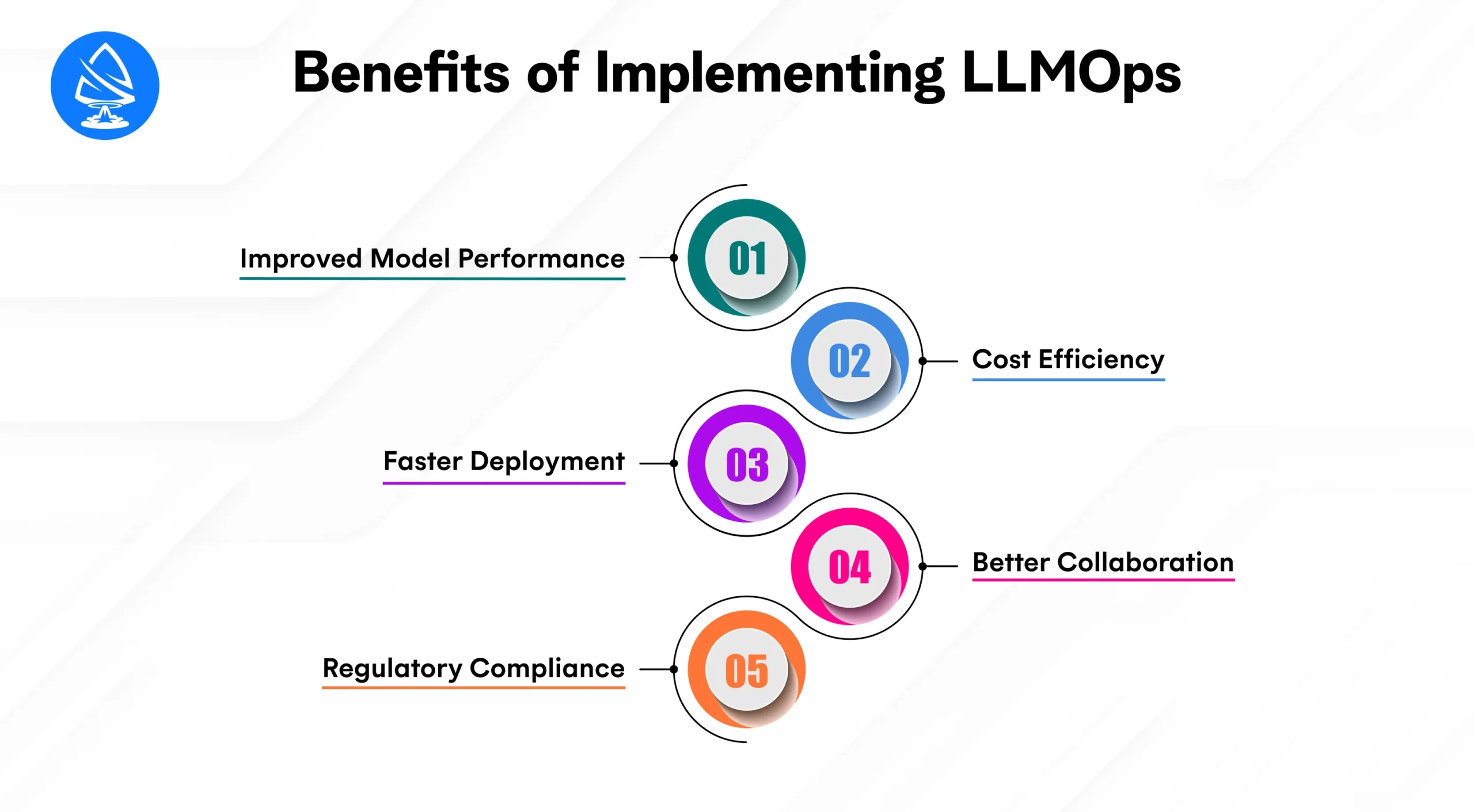

Benefits of Implementing LLMOps

Implementing LLMOps within an organization offers numerous benefits that improve the performance, scalability, and efficiency of large language models. Key advantages include:

1. Improved Model Performance

By automating repetitive tasks and adding real-time monitoring, LLMOps helps models deliver consistently strong performance. Continuous fine-tuning based on user feedback, new data, or changing conditions helps maintain accuracy and improve predictions over time.

2. Cost Efficiency

Training large language models can be expensive. LLMOps platforms optimize resource usage, reducing cloud costs and compute consumption by making training and inference more efficient.

3. Faster Deployment

With automated workflows for training, testing, and deployment, organizations can roll out LLMs faster and stay ahead in the competitive AI landscape.

4. Better Collaboration

LLMOps platforms enable collaboration among data scientists, engineers, and AI specialists by streamlining workflows and making version control and performance tracking easier.

5. Regulatory Compliance

By enforcing governance protocols, LLMOps helps keep models compliant with data privacy regulations and ethical standards, reducing the risk of misuse or bias in AI-generated content.

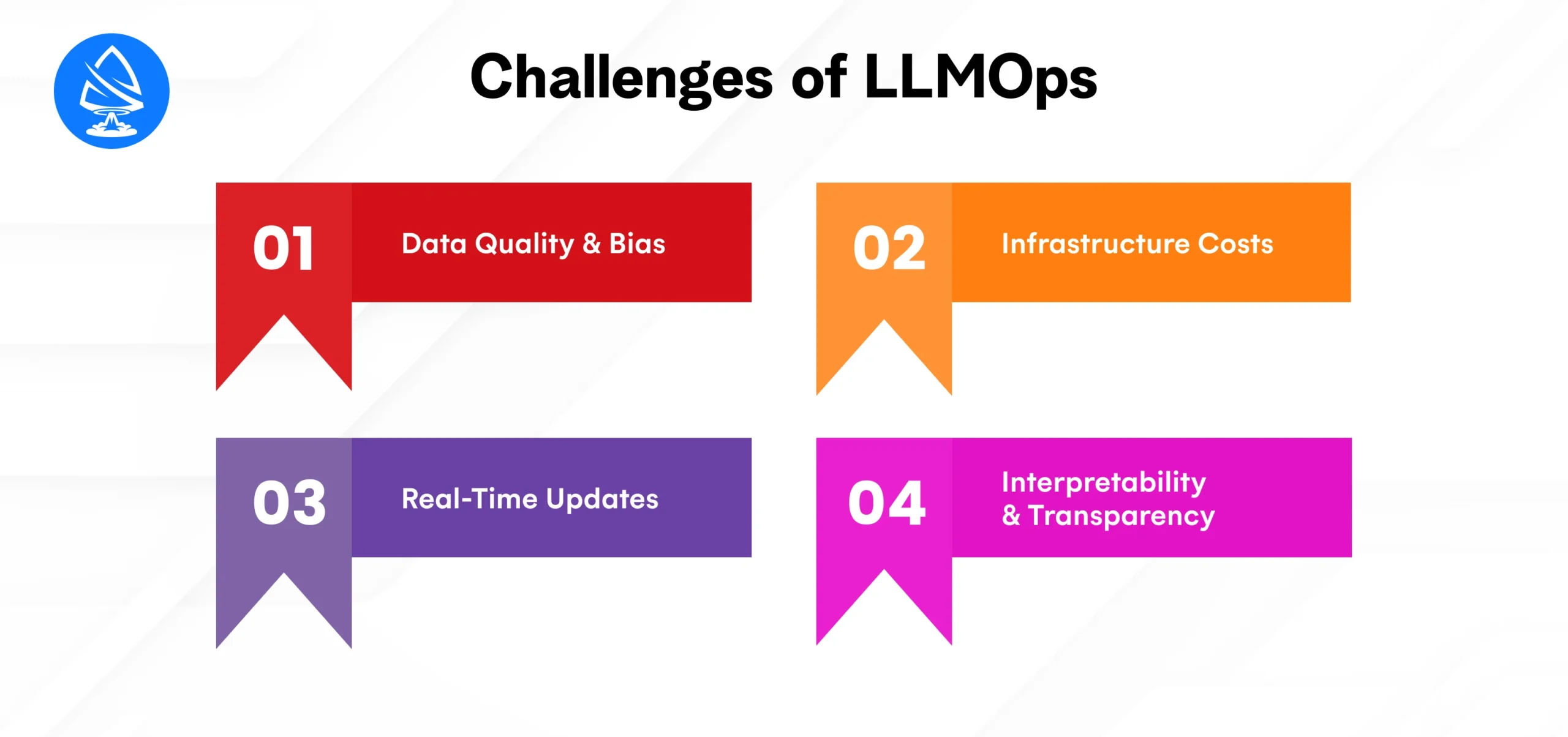

Challenges of LLMOps

While LLMOps brings significant benefits, it also faces specific challenges that must be addressed for optimal performance:

1. Data Quality and Bias

LLMs are typically trained on vast amounts of data, which can contain biases. Ensuring that models are fair and non-discriminatory is a key challenge in LLMOps.

2. Infrastructure Costs

Training and deploying large models demands substantial computational resources, which leads to high infrastructure costs. Managing and optimizing cloud services and computing power effectively is essential.

3. Real-Time Updates

LLMs often require continuous fine-tuning, and keeping a model up to date with new data and user feedback in real time can be complex.

4. Interpretability and Transparency

Understanding and explaining why an LLM makes a particular decision is still a significant challenge. Users and developers alike need greater interpretability of AI outputs to ensure trust and transparency.

The Future of LLMOps

As demand for AI-driven applications continues to rise, the role of LLMOps will only grow. Here are some emerging trends:

1. Automation of End-to-End AI Workflows

Future LLMOps platforms will automate not only training and deployment but also data collection, preprocessing, and model evaluation, streamlining operations even further.

2. Enhanced AI Governance

As ethical AI becomes a priority, LLMOps will evolve to include stronger governance frameworks that ensure compliance with evolving laws and regulations.

3. Increased Integration with Industry-Specific Solutions

LLMOps will increasingly integrate with industry-specific solutions (e.g., healthcare, finance, education), allowing companies to deploy AI solutions tailored to their unique needs.

4. Better AI Model Explainability

With a growing focus on explainable AI, future LLMOps platforms will offer better tools for explaining how and why the AI makes certain decisions, improving user trust and adoption.

Conclusion

LLMOps is set to become a cornerstone of the development and deployment of AI-driven applications, particularly those built on Large Language Models. By streamlining model operations, improving scalability, and supporting regulatory compliance, Large Language Model Operations will play a critical role in helping businesses unlock the full potential of AI.

Whether you’re in the early stages of AI adoption or already working with LLMs, understanding LLMOps and using the right tools will help you build smarter, more efficient, and more reliable AI solutions.

If you’re ready to develop your own LLMOps platform or integrate LLMOps into your business, consider working with an AI development company in the USA or hiring AI developers to guide your project to success.

Frequently Asked Questions

1. What is LLMOps?

LLMOps refers to the operational practices and tools used to deploy, monitor, and manage Large Language Models (LLMs) in real-world applications.

2. How does LLMOps differ from MLOps?

While both deal with machine learning models, LLMOps is designed specifically for the unique challenges of large language models, including high computational demands and complex training requirements.

3. Can LLMOps be used for both NLP and computer vision tasks?

LLMOps is primarily focused on natural language processing (NLP) tasks, but its underlying principles can also be applied, with adjustments, to other AI tasks such as computer vision.

4. What are some popular LLMOps platforms?

Commonly used options include general-purpose MLOps platforms such as TensorFlow Extended (TFX), Kubeflow, and MLflow. These provide scalable solutions for model deployment, monitoring, and lifecycle management, and can be adapted to LLM workloads.

5. What are the biggest challenges in LLMOps?

Challenges include data bias, high infrastructure costs, and ensuring model interpretability.

6. What industries benefit from LLMOps?

Industries such as finance, healthcare, e-commerce, and entertainment are using LLMOps for enhanced customer service, data analysis, and personalized experiences.

7. How do I implement LLMOps in my organization?

To implement LLMOps, start by evaluating your current machine learning infrastructure, choosing the right AI models, and integrating LLMOps frameworks for deployment, monitoring, and maintenance.

8. What is the future of LLMOps?

The future of LLMOps lies in increased automation, better governance, and greater AI transparency, enabling businesses to deploy large language models more efficiently and responsibly.