The field of natural language processing (NLP) has evolved considerably over the past few years, and one of the driving forces behind this transformation is the development of Generative Pretrained Transformers (GPT). GPT models, developed by OpenAI, have changed how machines understand and generate human language, opening up possibilities for everything from chatbots to content creation and code generation.

Building your own GPT model can be a rewarding and empowering experience, whether you’re a developer, researcher, or entrepreneur looking to explore the potential of AI. With advances in technology and the availability of frameworks and tools, creating a custom GPT model is now more accessible than ever.

In this comprehensive guide, we’ll walk you through the steps needed to build your own GPT model, the tools you will need, and best practices to ensure success in your AI journey. Partnering with AI application development services can help streamline the process and ensure the successful development of your model.

What Is GPT?

GPT stands for Generative Pretrained Transformer, a type of deep learning model used for natural language processing (NLP) tasks such as text generation, language translation, summarization, question answering, and more. GPT models are based on the transformer architecture, a neural network design that has become one of the most successful approaches for understanding and generating human language.

Developed by OpenAI, GPT models are pretrained on massive datasets and can then be fine-tuned for specific tasks. The main strength of GPT is its ability to generate coherent and contextually relevant text based on a given prompt. Over time, GPT models have evolved, with newer versions (like GPT-3 and GPT-4) becoming increasingly capable of handling more complex language tasks with higher accuracy and fluency.

Key Features of GPT

Generative:

GPT is a generative model, which means it can produce new content (text) based on a given input. For example, you can provide it with a sentence, and it can generate the next few words, or even entire paragraphs, that coherently continue the text.

Pretrained:

GPT models are pretrained on vast amounts of text data from the internet, books, articles, and other sources. This means they already have a broad grasp of language patterns, grammar, and context before being used for specific tasks. The pretrained model can then be fine-tuned for more specific applications, improving its performance in certain domains.

Transformer Architecture:

GPT uses the transformer architecture, which allows it to efficiently process long-range dependencies in text. Unlike recurrent models that process text one token at a time, transformers look at the entire sequence of words simultaneously, making them more powerful at capturing context and relationships between words over long distances.

Contextual Understanding:

GPT models excel at using the context of words within a sentence, paragraph, or even a whole document. This is one of the key reasons GPT can generate human-like text: it doesn’t just predict the next word based on the immediately preceding word, but instead uses the entire context provided in the input.

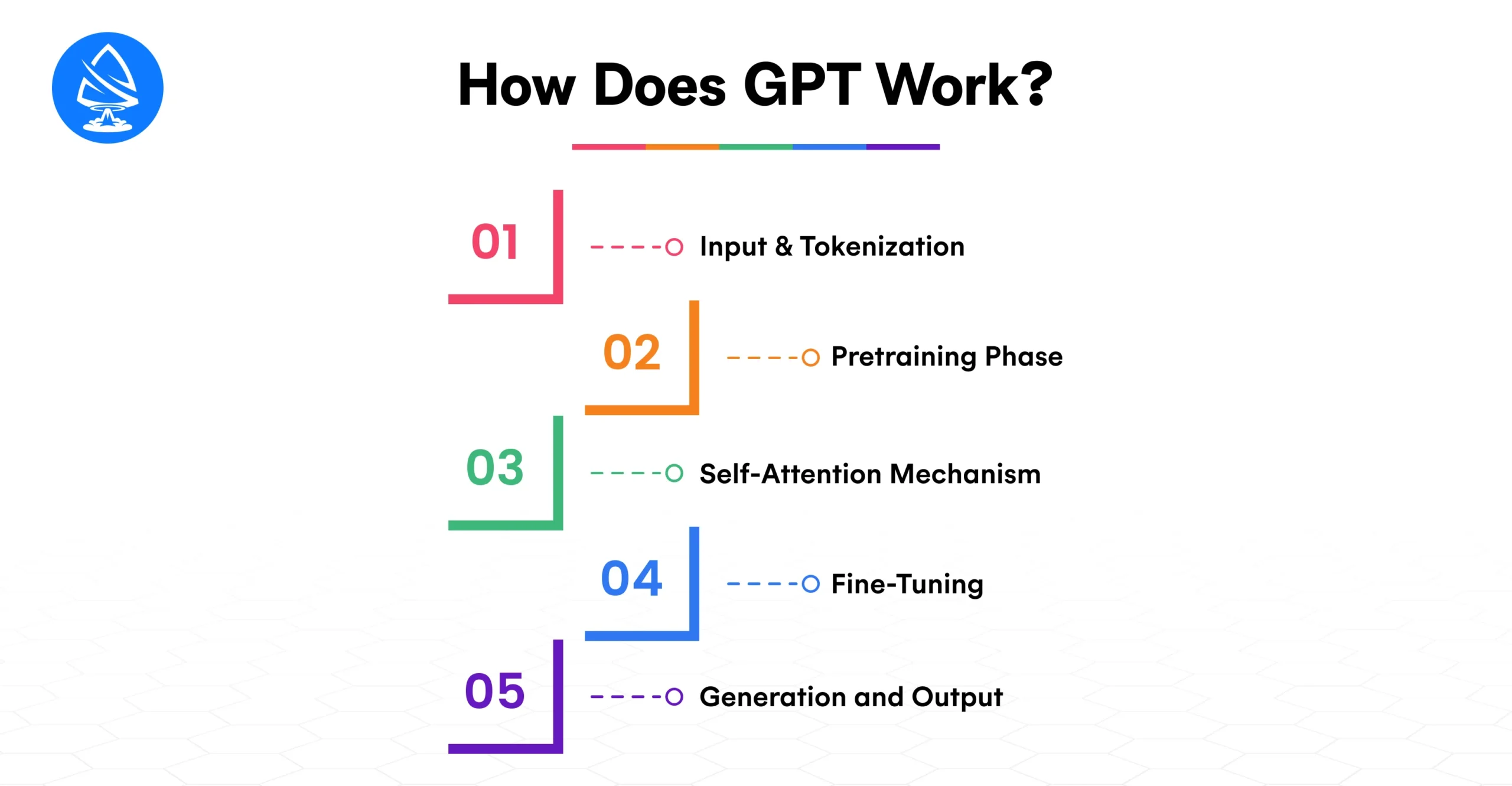

How Does GPT Work?

1. Input and Tokenization:

When you provide a prompt to a GPT model, the first step is tokenization. Tokenization breaks your input text into smaller units (usually words or subwords) that the model can process. Each distinct token is mapped to a unique integer ID, so the model receives the input as a sequence of numbers.
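
To make the token-to-ID mapping concrete, here is a minimal sketch. It assumes a simple word-level tokenizer built with a regular expression; real GPT models use a learned subword scheme (byte-pair encoding) instead, and the names `build_vocab`, `tokenize`, and `encode` are illustrative helpers rather than part of any library.

```python
import re

def tokenize(text):
    """Split lowercased text into word tokens and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(corpus):
    """Assign each distinct token a unique integer ID, in order of first appearance."""
    vocab = {"<unk>": 0}  # ID 0 is reserved for tokens never seen in the corpus
    for text in corpus:
        for token in tokenize(text):
            vocab.setdefault(token, len(vocab))
    return vocab

def encode(text, vocab):
    """Map text to a list of token IDs, falling back to <unk> for unknown tokens."""
    return [vocab.get(token, vocab["<unk>"]) for token in tokenize(text)]

vocab = build_vocab(["The sun rises in the east."])
ids = encode("The sun sets in the west.", vocab)
print(ids)
```

Words that never appeared in the vocabulary ("sets", "west") collapse to the `<unk>` ID here, which is one reason production tokenizers work with subwords instead of whole words.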

2. Pretraining Phase:

During pretraining, GPT is exposed to vast amounts of text data from books, websites, and other sources. In this phase, the model learns to predict the next word in a sentence by analyzing context. For example, given the phrase “The sun rises in the,” the model learns that “east” is the most likely next word. By repeating this task on a large scale, GPT builds a detailed statistical picture of language and its structure.
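
The next-word objective can be illustrated with a toy model. The sketch below is an assumption-laden stand-in: it simply counts which word follows each three-word context in a tiny made-up corpus, whereas GPT learns the same kind of prediction with a neural network over far longer contexts. The function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_next_word_counts(sentences, context_size=3):
    """Count which word follows each context of `context_size` words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i in range(len(words) - context_size):
            context = tuple(words[i : i + context_size])
            counts[context][words[i + context_size]] += 1
    return counts

def predict_next(counts, prompt, context_size=3):
    """Return the most frequent continuation of the prompt's last words, or None."""
    context = tuple(prompt.lower().split()[-context_size:])
    followers = counts.get(context)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = [
    "the sun rises in the east",
    "the sun sets in the west",
    "the sun rises in the east every morning",
]
counts = train_next_word_counts(corpus)
print(predict_next(counts, "The sun rises in the"))
print(predict_next(counts, "The sun sets in the"))
```

Because the prediction depends on the whole context window and not just the last word, "rises in the" and "sets in the" lead to different continuations.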

3. Self-Attention Mechanism:

The self-attention mechanism is a key component of the transformer architecture. It allows GPT to focus on different parts of the input text when making predictions. For example, in the sentence “The cat sat on the mat,” self-attention enables the model to relate “cat” and “mat” even though they are not next to each other in the sentence. This ability to capture long-range dependencies makes GPT models particularly powerful.
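
As a rough illustration of the mechanism, the following sketch implements single-head scaled dot-product self-attention in plain Python. It makes several simplifying assumptions: the embeddings are made-up 2-D vectors, queries, keys, and values are the embeddings themselves (a real transformer applies learned projection matrices first), and a causal mask is applied so each position only attends to itself and earlier positions, as GPT-style decoders do.

```python
import math

def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(embeddings, causal=True):
    """Scaled dot-product self-attention with queries = keys = values = embeddings."""
    dim = len(embeddings[0])
    outputs, all_weights = [], []
    for i, query in enumerate(embeddings):
        # With causal masking, position i may only attend to positions 0..i.
        visible = embeddings[: i + 1] if causal else embeddings
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in visible
        ]
        weights = softmax(scores)
        # Each output is the attention-weighted average of the visible vectors.
        output = [
            sum(w * value[d] for w, value in zip(weights, visible))
            for d in range(dim)
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

tokens = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # made-up 2-D vectors
outputs, weights = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much one token attends to the tokens visible to it; the weights in a row always sum to 1.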

4. Fine-Tuning:

After pretraining, GPT can be fine-tuned on more specific datasets related to particular tasks, such as question answering, language translation, or text summarization. Fine-tuning adjusts the model’s weights based on task-specific data, helping it specialize and improve performance on those tasks.

5. Generation and Output:

When generating text, GPT predicts one token at a time based on the context provided by the input and the previously generated tokens. It continues generating tokens until it reaches a stopping point, such as a maximum length or an end-of-sequence token. The result is a coherent and contextually relevant piece of text that aligns with the prompt.
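
The generation loop itself is short. The sketch below assumes a hypothetical `next_token_fn` that stands in for a trained model and always returns its single best guess (greedy decoding); real GPT models usually sample from a probability distribution over the vocabulary instead. The lookup table is invented purely for the demonstration.

```python
def generate(next_token_fn, prompt_tokens, max_length=10, eos_token="<eos>"):
    """Append one predicted token at a time until max_length or the EOS token."""
    tokens = list(prompt_tokens)
    while len(tokens) < max_length:
        token = next_token_fn(tokens)  # the model sees the full context so far
        if token == eos_token:
            break
        tokens.append(token)
    return tokens

# Hypothetical stand-in for a trained model: a lookup on the last token only.
TOY_TABLE = {"once": "upon", "upon": "a", "a": "time", "time": "<eos>"}

def toy_next_token(tokens):
    """Return the toy model's prediction, or <eos> if the last token is unknown."""
    return TOY_TABLE.get(tokens[-1], "<eos>")

print(" ".join(generate(toy_next_token, ["once"])))
```

Both stopping conditions from the text appear here: the loop ends when the sequence reaches `max_length` or when the model emits the end-of-sequence token.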

Why Build Your Own GPT Model?

While OpenAI’s GPT models like ChatGPT offer incredible capabilities, there are several reasons why you might want to build your own GPT model:

- Customization: Tailor the model to specific tasks or industries, such as customer support, content creation, or healthcare.

- Control Over Data: Train the model with your proprietary datasets for more precise outputs.

- Cost-Effectiveness: Depending on your use case, building your own GPT model can save on API usage costs.

- Innovation: Having control over the development process allows you to experiment with the latest AI techniques and innovations.

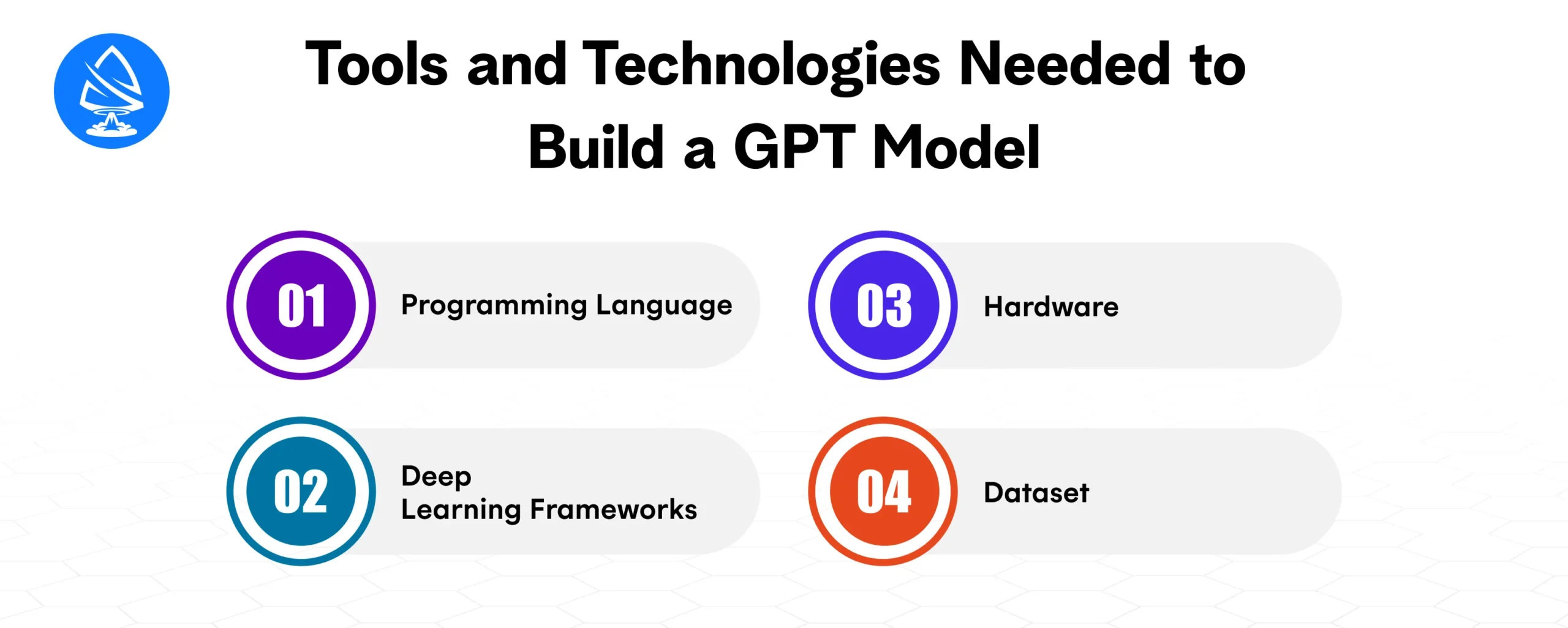

Tools and Technologies Needed to Build a GPT Model

1. Programming Language: Python

Python is the go-to programming language for building and deploying GPT models due to its extensive support for machine learning and data science libraries. Libraries like TensorFlow, PyTorch, and Hugging Face’s Transformers make it easier to build, train, and fine-tune deep learning models.

2. Deep Learning Frameworks

- PyTorch: This is one of the most popular deep learning frameworks used to build transformer models, including GPT. PyTorch offers dynamic computation graphs, which makes it easier to experiment and debug.

- TensorFlow: Another powerful framework that supports model building and deployment. TensorFlow 2 runs eagerly by default but can compile code into static graphs, which suits production environments.

- Hugging Face’s Transformers: This library provides pre-built transformer models, including GPT-2 and other openly released language models such as GPT-Neo and GPT-J. GPT-3 and GPT-4 are not distributed as downloadable weights; they are available only through OpenAI’s API. The library is an excellent choice for anyone looking to fine-tune existing models.

3. Hardware: GPUs and TPUs

Training a GPT model requires substantial computational resources. For training from scratch, using Graphics Processing Units (GPUs) or Tensor Processing Units (TPUs) is essential. These accelerators significantly speed up the training process, reducing the time it takes to build and deploy your model.

- Cloud Platforms: Platforms like Google Cloud, AWS, and Microsoft Azure offer powerful GPU instances for model training, and Google Cloud also offers TPUs.

4. Dataset: Gathering and Preprocessing Data

A large and diverse dataset is crucial for training a high-quality GPT model. Data can come from various sources, including:

- Public Datasets: Open datasets like Wikipedia, BooksCorpus, and Common Crawl are often used to train GPT-style models.

- Domain-Specific Datasets: If you’re building a custom GPT model for a specific industry, you may need to gather domain-specific data.

Data Preprocessing:

- Tokenization: Breaking text into tokens (words or subwords) is a crucial step in preparing the data for GPT training.

- Cleaning Data: Removing irrelevant content (e.g., HTML tags, special characters) ensures that the data is clean and suitable for training.
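
The cleaning step above might look something like the following sketch. It uses only the Python standard library and a deliberately crude regular expression to drop HTML tags; for messy real-world pages a proper HTML parser is usually a safer choice. The function name `clean_text` is an illustrative assumption.

```python
import html
import re

def clean_text(raw):
    """Strip HTML tags, decode entities, drop control characters, collapse whitespace."""
    text = re.sub(r"<[^>]+>", " ", raw)                     # remove tags such as <p> or <br/>
    text = html.unescape(text)                               # decode entities such as &amp;
    text = re.sub(r"[\x00-\x08\x0b-\x1f\x7f]", " ", text)    # remove control characters
    text = text.replace("\u00a0", " ")                       # normalize non-breaking spaces
    return re.sub(r"\s+", " ", text).strip()                 # collapse runs of whitespace

print(clean_text("<p>GPT&nbsp;models &amp; <b>transformers</b></p>\n"))
```

Running the cleaned text through the tokenizer afterwards keeps markup fragments from leaking into the training vocabulary.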

Steps to Build Your Own GPT Model

Step 1: Prepare Your Environment

Set up your development environment with the necessary tools:

- Python: Install a recent version of Python that your chosen libraries support, and set up a virtual environment to manage dependencies.

- Deep Learning Libraries: Install PyTorch or TensorFlow, as well as Hugging Face’s Transformers and Datasets libraries.

pip install torch tensorflow transformers datasets

- Hardware Setup: If you’re training the model locally, ensure your system has access to a suitable GPU. Otherwise, you can use cloud services for resource scalability.

Step 2: Data Collection and Preprocessing

Gather a large dataset relevant to your task. For instance, if you want to create a GPT model for financial analysis, you’ll need financial news articles, reports, and other related data. Once you have the data, proceed with cleaning and tokenization.

- Example: Use Hugging Face’s datasets library to easily load and preprocess datasets.

from datasets import load_dataset

dataset = load_dataset("wikipedia", "20200501.en")  # an older dump ID; check the Hugging Face Hub for configurations that are currently hosted

Step 3: Model Selection and Customization

You have two options:

Train From Scratch: If you have access to massive computational resources and large datasets, you can train the model from scratch. This involves initializing a transformer model with random weights and training it on your data.

Fine-Tuning an Existing Model: For most use cases, fine-tuning a pre-trained model on your specific dataset is more practical (e.g., GPT-2, whose weights are openly available, or GPT-3, which can only be tuned through OpenAI’s hosted service). This approach saves time and computational resources.

Example: Fine-tune a pre-trained GPT-2 model on your custom dataset using Hugging Face’s Trainer API. Here, data is assumed to be a list of training strings that you supply:

from transformers import GPT2LMHeadModel, GPT2Tokenizer, Trainer, TrainingArguments, DataCollatorForLanguageModeling

from datasets import Dataset

model = GPT2LMHeadModel.from_pretrained("gpt2")

tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

tokenizer.pad_token = tokenizer.eos_token  # GPT-2 has no padding token by default

train_dataset = Dataset.from_dict({"text": data}).map(lambda batch: tokenizer(batch["text"], truncation=True, max_length=512), batched=True, remove_columns=["text"])

data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)  # mlm=False builds causal language modeling labels

training_args = TrainingArguments(output_dir="./results", num_train_epochs=3)

trainer = Trainer(model=model, args=training_args, train_dataset=train_dataset, data_collator=data_collator)

trainer.train()

Step 4: Model Evaluation and Testing

After training the model, it’s essential to evaluate its performance. Use metrics like perplexity or BLEU score to evaluate the model’s text generation capabilities.

- Example: Test the model’s output by generating text based on a prompt:

input_ids = tokenizer.encode("Once upon a time", return_tensors="pt")

output_ids = model.generate(input_ids, max_length=50, do_sample=True, num_return_sequences=3, pad_token_id=tokenizer.eos_token_id)  # sampling is needed to return several different sequences

print(tokenizer.decode(output_ids[0], skip_special_tokens=True))
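
Perplexity, mentioned in this step, can be computed from the probabilities a model assigns to each correct next token. The sketch below assumes you already have those per-token probabilities as a plain list; the two example lists are made up to contrast a confident model with an uncertain one.

```python
import math

def perplexity(token_probabilities):
    """Perplexity is the exponential of the average negative log-probability per token."""
    if not token_probabilities:
        raise ValueError("need at least one token probability")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probabilities) / len(token_probabilities)
    return math.exp(avg_neg_log_prob)

confident = [0.9, 0.8, 0.95, 0.85]   # hypothetical probabilities for the correct tokens
uncertain = [0.2, 0.1, 0.25, 0.15]
print(perplexity(confident) < perplexity(uncertain))
```

A model that assigns probability 0.5 to every correct token has a perplexity of 2, which can be read as being as uncertain as a fair choice between two options at each step.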

Step 5: Deploy Your GPT Model

Once you’ve fine-tuned your GPT model, you can deploy it in various ways:

- Web Interface: Build a user interface (UI) using frameworks like Flask or Streamlit to interact with your model.

- API: Create an API using FastAPI or Flask to allow users to send text inputs and get responses from the model.
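
Whichever web framework you pick, the core of the API option is a function that validates a request and returns the model's response. The sketch below is framework-agnostic and uses only the standard library; `generate_text` is a hypothetical placeholder for a call into your model, and the request fields (`prompt`, `max_length`) and the limit of 512 are assumptions made for illustration.

```python
import json

def generate_text(prompt, max_length=50):
    """Hypothetical placeholder; a real service would call the model here."""
    return (prompt + " ...")[:max_length]

def handle_request(body):
    """Validate a JSON request body and return (status_code, JSON response body)."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, json.dumps({"error": "body must be valid JSON"})
    prompt = payload.get("prompt") if isinstance(payload, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        return 400, json.dumps({"error": "'prompt' must be a non-empty string"})
    max_length = payload.get("max_length", 50)
    if isinstance(max_length, bool) or not isinstance(max_length, int) or not 1 <= max_length <= 512:
        return 400, json.dumps({"error": "'max_length' must be an integer from 1 to 512"})
    return 200, json.dumps({"completion": generate_text(prompt, max_length)})

status, response = handle_request('{"prompt": "Once upon a time", "max_length": 30}')
print(status, response)
```

In a FastAPI or Flask app, a route handler would pass the request body to a function like this and return its status code and JSON payload.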

Best Practices for Building GPT Models

Building a GPT model requires a careful, methodical approach to ensure that the model is trained effectively, performs well, and aligns with its intended application. Whether you’re building a custom model from scratch, fine-tuning a pre-trained GPT model, or adapting it for a specific task, following best practices will help you achieve strong performance, scalability, and efficiency.

Here’s a comprehensive look at the best practices for building GPT models:

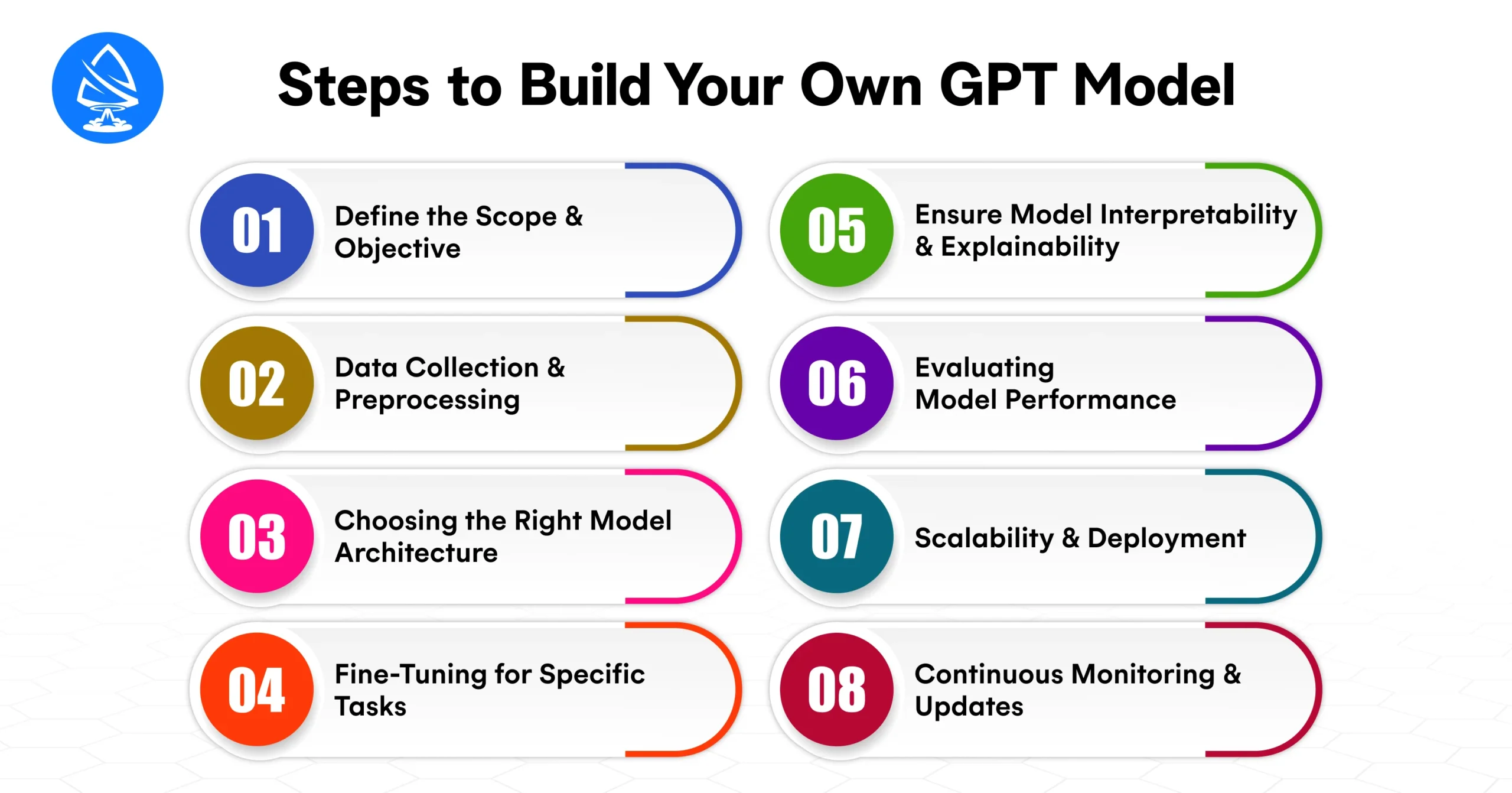

1. Define the Scope and Objective

Before diving into the technical aspects of building your GPT model, it’s essential to clearly define the objective and scope of the project. Understanding what the model will be used for helps guide the development process and ensures that the model aligns with specific needs and goals.

Key Considerations:

- Task-specific objectives: Are you using GPT for text generation, summarization, question answering, or language translation?

- Target audience: Is the model aimed at end-users (e.g., chatbots, content creation) or business applications (e.g., financial analysis, market predictions)?

- Performance goals: Are you aiming for speed, accuracy, or scalability in your model?

Defining these parameters early in the process ensures that the model is built to meet real-world requirements, avoiding over-complication or wasted resources.

2. Data Collection and Preprocessing

The quality of your data significantly impacts the performance of your GPT model. Building a strong GPT model requires training it on large datasets that reflect the language and task you’re targeting.

Key Best Practices for Data Collection:

- Source Diverse Data: Ensure the dataset is diverse and comprehensive, covering a broad spectrum of text from books, articles, social media, websites, and domain-specific data if required. The more diverse the data, the more robust the model will be in handling different topics, contexts, and languages.

- Data Relevance: Collect data that’s relevant to the application. For example, if building a chatbot for healthcare, focus on medical literature, research papers, and healthcare communication.

- Data Cleaning: Preprocess the data to remove noise (e.g., irrelevant content, duplicates, and formatting issues). Clean text helps the model focus on learning from valuable, high-quality content.

- Tokenization: Ensure efficient tokenization, which breaks down text into manageable units (tokens). Tokenization is a crucial preprocessing step that helps the model learn meaningful relationships between words and phrases.
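
Removing duplicates, one of the cleaning practices listed above, can be sketched with content hashing. This minimal example assumes exact duplicates after light normalization (lowercasing and whitespace collapsing); catching near-duplicates in large corpora typically needs fuzzier techniques. The helper names are illustrative.

```python
import hashlib
import re

def normalize(text):
    """Lowercase and collapse whitespace so trivially different copies compare equal."""
    return re.sub(r"\s+", " ", text).strip().lower()

def deduplicate(documents):
    """Keep the first occurrence of each document, comparing normalized content hashes."""
    seen, unique = set(), []
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique

docs = [
    "How do I reset my password?",
    "how do I  reset my password?",
    "Where is my order?",
]
print(deduplicate(docs))
```

Storing hashes instead of full documents keeps memory use modest even when the corpus is large.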

Example:

For a customer service chatbot, gather conversation data, customer feedback, and product-related FAQs to ensure the model can generate meaningful, context-aware responses.

3. Choosing the Right Model Architecture

While GPT-3 and GPT-4 have set benchmarks for generative language models, there are several architecture options available for your project depending on your resource constraints, performance needs, and goals.

Considerations:

- GPT-2 vs. GPT-3 vs. GPT-4: If you have limited resources, GPT-2 might be sufficient for many applications, and its weights can be downloaded and run on your own hardware. GPT-3 and GPT-4 are more powerful and capable of handling larger, more complex tasks, but they are accessible only through OpenAI’s API.

- Model Size: Choose a model size that fits your data and computational resources. While large models like GPT-3 have billions of parameters, smaller versions may be more efficient for specialized tasks or businesses with fewer resources.

- Customizing Pretrained Models: Fine-tuning a pre-trained model like GPT-2 or GPT-3 is often more efficient than training from scratch, especially if you are working with a smaller dataset. Fine-tuning helps the model perform well on specific tasks without requiring massive computational power.
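
To get a feel for what "model size" means in practice, the sketch below counts the parameters of a GPT-2-style decoder from four hyperparameters. It assumes the standard GPT-2 layout (token and position embeddings, a fused query/key/value projection, a feed-forward block four times the model width, biases throughout, and an output layer that reuses the token embedding matrix) and plugs in the published configuration of the smallest GPT-2 model. The function name is hypothetical.

```python
def gpt2_parameter_count(vocab_size, context_length, d_model, n_layers):
    """Count parameters of a GPT-2-style decoder with tied input/output embeddings."""
    embeddings = vocab_size * d_model + context_length * d_model
    layer_norm = 2 * d_model  # one scale and one bias per feature
    attention = (d_model * 3 * d_model + 3 * d_model) + (d_model * d_model + d_model)
    mlp = (d_model * 4 * d_model + 4 * d_model) + (4 * d_model * d_model + d_model)
    per_layer = 2 * layer_norm + attention + mlp
    return embeddings + n_layers * per_layer + layer_norm  # plus the final layer norm

small = gpt2_parameter_count(vocab_size=50257, context_length=1024, d_model=768, n_layers=12)
print(f"{small:,} parameters, about {small * 4 / 1e9:.2f} GB in 32-bit floats")
```

The weights alone are only part of the memory budget; training also needs room for gradients, optimizer state, and activations, so the hardware requirement is a multiple of this figure.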

4. Fine-Tuning for Specific Tasks

Fine-tuning is one of the most critical aspects of building a GPT model that performs well in a specific context. This involves taking a pre-trained model and adjusting its weights using your own domain-specific data.

Best Practices for Fine-Tuning:

- Small Datasets: Fine-tuning is especially useful when you have a limited dataset for a specific domain or task. It’s often more cost-effective than training a model from scratch.

- Avoid Overfitting: Fine-tuning can cause the model to overfit if you train it on a small or very specific dataset. To avoid overfitting, consider using regularization techniques or early stopping during the training process.

- Task-Specific Data: When fine-tuning, make sure that your training data is tailored to the model’s intended use. For example, if you’re fine-tuning for sentiment analysis, ensure your data includes labeled sentiment-based text.
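
Early stopping, one of the overfitting safeguards listed above, comes down to watching the validation loss and halting once it stops improving. The sketch below assumes you record one validation loss per epoch; the `patience` and `min_delta` parameters and the example losses are illustrative choices, not recommended values.

```python
def early_stopping_epoch(validation_losses, patience=2, min_delta=0.0):
    """Return the index of the epoch at which training would stop.

    Training stops once the validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(validation_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(validation_losses) - 1  # never triggered; all epochs ran

losses = [2.10, 1.75, 1.60, 1.62, 1.66, 1.71]  # hypothetical validation losses
print(early_stopping_epoch(losses))
```

In this made-up run the loss bottoms out at the third epoch and then climbs, which is the typical signature of a model starting to memorize a small fine-tuning set.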

Example:

For building a customer support AI chatbot, fine-tune GPT using conversation logs, common customer queries, and product information to ensure it handles domain-specific language and responses.

5. Ensure Model Interpretability and Explainability

While GPT models are highly powerful, they’re often referred to as “black-box” models because their decision-making processes aren’t always easy to interpret. Ensuring some level of interpretability is important, especially in industries like finance or healthcare, where transparency is critical.

Key Steps:

- Model Explainability: Implement techniques to explain model predictions, such as visualizing attention weights to show which parts of the input text were most influential in the model’s output. Treat such views as a rough indication rather than a complete explanation.

- User Feedback Loops: Incorporate human feedback during training to correct misinterpretations and ensure the model aligns with human expectations, especially when used in sensitive applications.

Example:

In healthcare applications, AI systems must provide explanations for their recommendations, such as the reasoning behind a diagnosis or treatment recommendation.

6. Evaluating Model Performance

Once your GPT model is trained or fine-tuned, it’s important to evaluate its performance before deployment. The model should not only generate text but also meet specific performance metrics that align with your business or project goals.

Evaluation Metrics:

- Perplexity: This is a standard measure of how well the model predicts the next token in a sequence. Lower perplexity indicates better performance.

- BLEU Score: For tasks like machine translation or text summarization, the BLEU score measures the quality of generated text by comparing its n-gram overlap with human-written references.

- Accuracy and Precision: For tasks like classification or sentiment analysis, evaluate the model’s accuracy, precision, and recall.
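
For the classification metrics in the list above, a small worked example may help. The sketch assumes a binary sentiment task with string labels; the label lists are invented, and `precision_recall` is an illustrative helper rather than a library function.

```python
def precision_recall(true_labels, predicted_labels, positive="positive"):
    """Compute precision and recall for one class from paired label lists."""
    pairs = list(zip(true_labels, predicted_labels))
    true_pos = sum(1 for t, p in pairs if t == positive and p == positive)
    false_pos = sum(1 for t, p in pairs if t != positive and p == positive)
    false_neg = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    return precision, recall

truth = ["positive", "negative", "positive", "positive", "negative"]
preds = ["positive", "positive", "negative", "positive", "negative"]
accuracy = sum(t == p for t, p in zip(truth, preds)) / len(truth)
precision, recall = precision_recall(truth, preds)
print(accuracy, round(precision, 3), round(recall, 3))
```

Precision answers "of the items the model flagged as positive, how many really were?", while recall answers "of the truly positive items, how many did the model find?".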

Example:

For a content generation tool, evaluate the model on its ability to create coherent, contextually accurate, and grammatically correct articles. You can use human evaluators or automated metrics to assess the quality.

7. Scalability and Deployment

After your GPT model is fine-tuned and evaluated, it’s time for deployment. GPT models can require substantial computational power, especially when scaling for production environments.

Best Practices for Deployment:

- Cloud Infrastructure: Deploy your model on cloud platforms like AWS, Google Cloud, or Microsoft Azure for scalability and access to powerful accelerators.

- APIs for Access: Expose the GPT model via REST APIs or GraphQL to allow external applications and systems to interact with your model.

- Optimizing Performance: Use model optimization techniques like quantization or distillation to reduce the size and increase the efficiency of the model with little loss of accuracy.
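
Quantization, mentioned in the last bullet, stores weights as small integers plus a scale factor instead of full-precision floats. The sketch below shows one simple variant, symmetric 8-bit quantization with a single scale for the whole list; it is an illustration under that assumption, and production toolkits use more refined schemes. The weights are made up.

```python
def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.42, -1.27, 0.03, 0.88]  # made-up example weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
print(quantized, [round(w, 3) for w in restored])
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most half the scale factor per weight.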

Example:

For an enterprise-level chatbot system, deploy your GPT model via API endpoints to integrate with the company’s customer service platform and scale to handle millions of user queries daily.

8. Continuous Monitoring and Updates

AI models, including GPT, require regular updates and monitoring to ensure they continue to perform well as data and conditions change.

Key Considerations:

- Monitoring Performance: Continuously monitor the model’s accuracy, latency, and user feedback to identify areas for improvement.

- Data Drift: As real-world data evolves, the inputs the model sees may drift away from its training data. Regularly retrain the model with new data to maintain its relevance and accuracy.

- User Feedback Integration: Incorporate user feedback to improve the model over time and adapt to new needs or challenges.
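
One inexpensive signal of data drift is the share of incoming tokens that never appeared in the data the model was built on. The sketch below assumes whitespace tokenization and an arbitrary threshold of 20 percent; both are illustrative simplifications, and real monitoring would usually combine several signals.

```python
def unknown_token_rate(texts, known_vocabulary):
    """Fraction of whitespace-separated tokens that are absent from the vocabulary."""
    tokens = [token for text in texts for token in text.lower().split()]
    if not tokens:
        return 0.0
    return sum(token not in known_vocabulary for token in tokens) / len(tokens)

def drift_detected(baseline_texts, recent_texts, threshold=0.2):
    """Flag drift when the share of tokens unseen in the baseline exceeds the threshold."""
    vocabulary = {token for text in baseline_texts for token in text.lower().split()}
    return unknown_token_rate(recent_texts, vocabulary) > threshold

baseline = ["where is my order", "how do i reset my password"]
recent = ["can i pay with the new wallet app", "where is my order"]
print(drift_detected(baseline, recent))
```

A rising unknown-token rate suggests users are asking about topics the training data did not cover, which is a reasonable trigger for collecting new data and retraining.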

Conclusion

Building your own GPT model offers incredible opportunities to customize and optimize AI-driven text generation systems for your specific needs. By leveraging open-source frameworks and pre-trained models, you can save time and resources while still benefiting from the power of AI. Whether you’re a researcher, entrepreneur, or business leader, mastering the development of GPT models opens up new possibilities for innovation and automation.

Ready to build your own GPT model? Partner with a custom AI development company or hire AI developers to bring your GPT-based projects to life. Use our Cost Calculator to estimate your AI development project and start creating your model today!

Frequently Asked Questions

1. What is a GPT model?

A GPT model is a type of transformer-based deep learning model used for natural language processing (NLP) tasks, such as text generation, summarization, and translation.

2. Do I need to train my own GPT model?

It depends on your use case. You can fine-tune an existing GPT model for specific tasks, or train one from scratch if you have the necessary resources and a large dataset.

3. How much data do I need to train a GPT model?

Training a GPT model from scratch requires massive datasets, often in the range of tens of gigabytes or more. However, fine-tuning an existing model requires much less data.

4. Can I use GPT models for tasks other than text generation?

Yes, GPT models can also be used for question answering, summarization, and language translation, among other NLP tasks.

5. What are the system requirements for training a GPT model?

To train a GPT model, you need high-performance GPUs or TPUs. For smaller-scale models, a GPU with at least 16GB VRAM is recommended.

6. How long does it take to train a GPT model?

Training time depends on factors like the model size, dataset size, and hardware used. Fine-tuning can take a few hours to days, while training from scratch can take weeks or months.

7. Can I integrate GPT models into my applications?

Yes, you can integrate GPT models into web apps, chatbots, customer service systems, and more via APIs or direct deployment.

8. Are there open-source GPT models available?

Yes. GPT-2’s weights are openly available for fine-tuning and integration into your applications. GPT-3 is not open source, but it can be accessed through the OpenAI API.